Blog Speed Optimization – Make Google & Users Happy!

Revolutionizing Industries With Edge AI

Written by TeachAhead » Updated on: June 17th, 2025

The synergy between AI, cloud computing, and edge technologies is reshaping innovation. Currently, most IoT solutions rely on basic telemetry systems. These systems capture data from edge devices and store it centrally for further use. Our approach goes far beyond this conventional method.

We leverage advanced machine learning and deep learning models to solve real-world problems. These models are trained in cloud environments and deployed directly onto edge devices. Deploying AI models to the edge ensures real-time decision-making and creates a feedback loop that continuously enhances business processes, driving digital transformation.

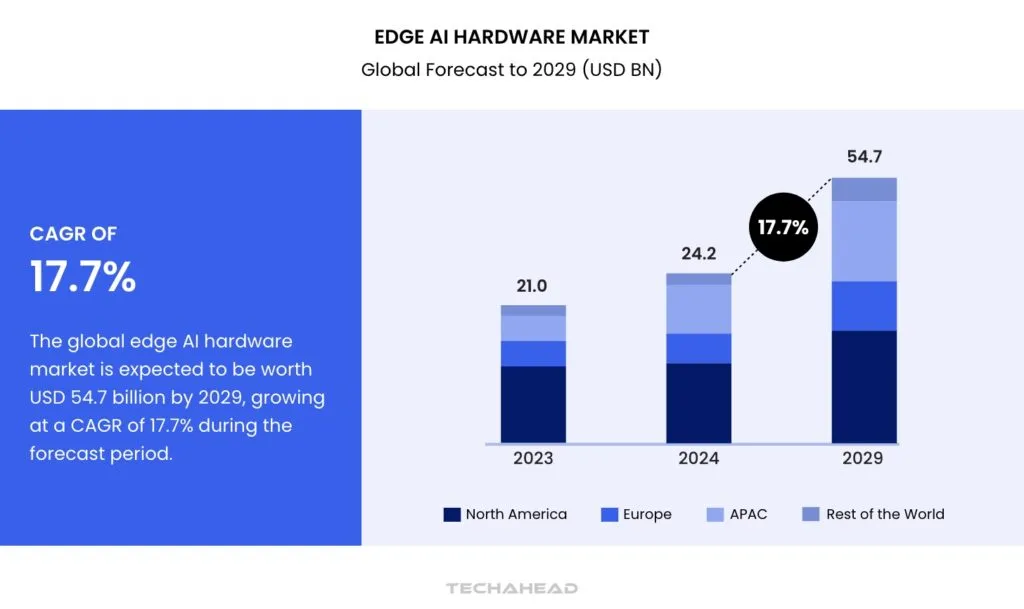

The AI in edge hardware market is set for exponential growth. Valued at USD 24.2 billion in 2024, it is expected to reach USD 54.7 billion by 2029, achieving a CAGR of 17.7%.

Similarly, the edge AI software market is forecasted to grow from USD 1.1 billion in 2023 to USD 4.1 billion by 2028, reflecting a remarkable CAGR of 30.5%.
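The growth rates quoted above can be sanity-checked with the standard compound annual growth rate (CAGR) formula. The sketch below is only an illustration: it assumes the endpoints are exactly the rounded figures given in the text, and the helper name `cagr` is ours.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.177 means 17.7%)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the text, in USD billions.
hardware = cagr(24.2, 54.7, 2029 - 2024)
software = cagr(1.1, 4.1, 2028 - 2023)

print(f"Edge AI hardware CAGR: {hardware:.1%}")
print(f"Edge AI software CAGR: {software:.1%}")
```

With these rounded endpoints the hardware figure comes out at roughly 17.7%, matching the text. The software figure lands close to 30%, slightly under the quoted 30.5%, presumably because the published rate was computed from unrounded market values.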

The adoption of edge AI software development is growing due to several factors, such as the rise in IoT devices, the need for real-time data processing, and the growth of 5G networks. Businesses are using AI in edge computing to improve operations, gain insights, and fully utilize data from edge devices. Other factors driving this growth include the popularity of social media and e-commerce, deeper integration of AI into edge systems, and the increasing workloads managed by cloud computing.

The learning path focuses on scalable strategies for deploying AI models on devices like drones and self-driving cars. It also introduces structured methods for implementing complex AI applications.

A key part of this approach is containerization. Containers make it easier to deploy across different hardware by packaging the necessary environments for various edge devices. This approach works well with Continuous Integration and Continuous Deployment (CI/CD) pipelines, making container delivery to edge systems smoother.

This blog will help you understand how AI in edge computing can be integrated into your business. These innovations aim to simplify AI deployment while meeting the changing needs of edge AI ecosystems.

Key Takeaways:

- The integration of AI, cloud computing, and edge technologies is transforming innovation across industries. Traditional IoT solutions depend on basic telemetry systems to collect and centrally store data for processing.

- Advanced machine learning and deep learning models elevate this approach, solving complex real-world challenges. These models are trained using powerful cloud infrastructures to ensure robust performance.

- After training, the models are deployed directly onto edge devices for localized decision-making. This shift reduces latency and enhances the efficiency of IoT applications, offering smarter solutions.

What is Edge AI?

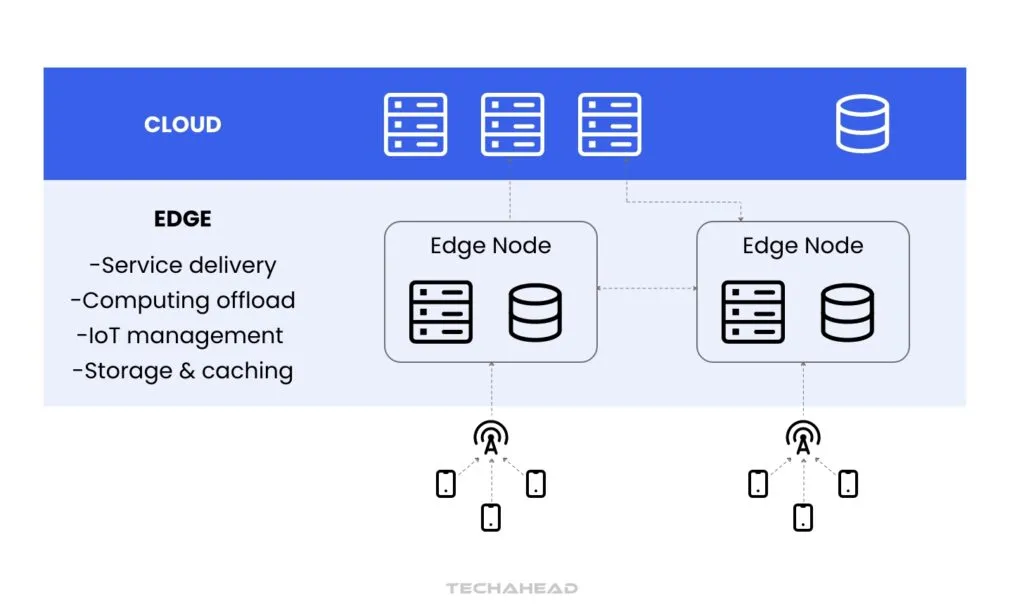

Edge AI is a system that connects AI operations between centralized data centers (cloud) and devices closer to users and their environments (the edge). Unlike traditional AI that runs mainly in the cloud, AI in edge computing focuses on decentralizing processes. This is different from older methods where AI was limited to desktops or specific hardware for tasks like recognizing check numbers.

The edge includes physical infrastructure like network gateways, smart routers, or 5G towers. However, its real value is in enabling AI on devices such as smartphones, autonomous cars, and robots. Instead of being just about hardware, AI in edge computing is a strategy to bring cloud-based innovations into real-world applications.

Recent advancements at the edge have improved how devices like computers, appliances, and vehicles work and are managed. Edge AI takes these improvements further by using cloud-based techniques in data science, machine learning, and AI beyond centralized systems.

AI in edge computing technology enables machines to mimic human intelligence, allowing them to perceive, interact, and make decisions autonomously. To achieve these complex capabilities, it relies on a structured life cycle that transforms raw data into actionable intelligence.

The Role of Deep Neural Networks (DNN)

At the core of AI in edge computing are deep neural networks, which replicate human cognitive processes through layered data analysis. These networks are trained using a process called deep learning. During training, vast datasets are fed into the model, allowing it to identify patterns and produce accurate outputs. This intensive learning phase often occurs in cloud environments or data centers, where computational resources and collaborative expertise from data scientists are readily available.

From Training to Inference

Once a deep learning model is trained, it transitions into an inference engine. The inference engine uses its learned capabilities to analyze new data and provide actionable insights. Unlike the training phase, which requires centralized resources, the inference stage operates locally on devices. This shift enables real-time decision-making, even in remote environments, making it ideal for edge AI deployments in industries like manufacturing, healthcare, and autonomous vehicles.
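To make the split between training and inference concrete, here is a deliberately tiny sketch in plain Python. It stands in a one-feature logistic regression for a deep network, and the names `train_in_cloud` and `EdgeInferenceEngine` as well as the sample readings are hypothetical. The point is only the shape of the workflow: heavy fitting happens in one place, and the device receives a frozen artifact that it uses for forward passes.

```python
import json
import math


def train_in_cloud(samples, labels, epochs=500, lr=0.5):
    """Fit a one-feature logistic regression with plain gradient descent."""
    weight, bias = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(samples, labels):
            pred = 1.0 / (1.0 + math.exp(-(weight * x + bias)))
            grad_w += (pred - y) * x
            grad_b += pred - y
        weight -= lr * grad_w / n
        bias -= lr * grad_b / n
    return {"weight": weight, "bias": bias}


class EdgeInferenceEngine:
    """Loads frozen parameters and only runs the forward pass."""

    def __init__(self, serialized_model: str):
        params = json.loads(serialized_model)
        self.weight = params["weight"]
        self.bias = params["bias"]

    def predict(self, x: float) -> float:
        return 1.0 / (1.0 + math.exp(-(self.weight * x + self.bias)))


# "Cloud" side: hypothetical normalized temperature readings, label 1 = overheating.
readings = [-2.0, -1.5, -1.0, -0.5, 0.5, 1.0, 1.5, 2.0]
overheating = [0, 0, 0, 0, 1, 1, 1, 1]
artifact = json.dumps(train_in_cloud(readings, overheating))

# "Edge" side: the device receives only the serialized artifact.
engine = EdgeInferenceEngine(artifact)
print(engine.predict(1.8) > 0.5, engine.predict(-1.8) > 0.5)
```

In a real deployment the artifact would be a trained neural network in a device-friendly format rather than two numbers in JSON, but the division of labor is the same.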

Real-World Applications

Edge AI operates on decentralized devices such as factory robots, hospital equipment, autonomous cars, satellites, and smart home systems. These devices run inference engines that analyze data and generate insights directly at the point of origin, minimizing dependency on cloud systems.

When AI in edge computing encounters complex challenges or anomalies, the problematic data is sent to the cloud for retraining. This iterative feedback loop enhances the original AI model’s accuracy and efficiency over time. Consequently, Edge AI systems continuously evolve, becoming more intelligent and responsive with each iteration.

Why Does the Feedback Loop Matter?

The feedback loop is a cornerstone of Edge AI’s success. It enables edge devices to identify and address gaps in their understanding by sending troublesome data to centralized systems for refinement. These improvements are reintegrated into the edge inference engines, ensuring that deployed models consistently improve in accuracy and performance.
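The loop described above can be sketched in a few lines. This is a simplified illustration, not a production design: the "model" is a single threshold, the `min_confidence` margin is a made-up value, and the labels the cloud uses for retraining are assumed to come from human review.

```python
from dataclasses import dataclass, field


@dataclass
class EdgeDevice:
    """Runs inference locally and queues uncertain samples for the cloud."""

    threshold: float  # decision boundary of the deployed model
    min_confidence: float = 0.2  # hypothetical margin below which we escalate
    retrain_queue: list = field(default_factory=list)

    def infer(self, value: float) -> str:
        if abs(value - self.threshold) < self.min_confidence:
            # The model is unsure: keep the sample for cloud retraining.
            self.retrain_queue.append(value)
        return "alert" if value >= self.threshold else "normal"


def retrain_in_cloud(labelled_samples):
    """Pick a new threshold midway between the two labelled classes."""
    normal = [v for v, label in labelled_samples if label == "normal"]
    alert = [v for v, label in labelled_samples if label == "alert"]
    return (max(normal) + min(alert)) / 2


device = EdgeDevice(threshold=5.0)
for reading in [1.0, 4.9, 5.1, 9.0]:
    device.infer(reading)

# Assumed: reviewers label the queued samples, plus one known alert case.
labelled = [(v, "normal") for v in device.retrain_queue] + [(6.0, "alert")]
device.threshold = retrain_in_cloud(labelled)  # refined model pushed back
device.retrain_queue.clear()

print(device.threshold, device.infer(5.1))
```

Only the two borderline readings travel to the cloud. Clear-cut cases are handled entirely on the device, which is what keeps the loop cheap.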

What Does Edge AI Look Like Today?

Edge AI integrates edge computing with artificial intelligence to redefine data processing and decision-making. Unlike traditional systems, AI in edge computing operates directly on localized devices like Internet of Things (IoT) devices or edge servers. This minimizes reliance on remote data centers, ensuring efficient data collection, storage, and processing at the device level.

By leveraging machine learning, AI in edge computing mimics human reasoning, enabling devices to make independent decisions without constant internet connectivity.

Localized Processing for Real-Time Intelligence

Edge AI transforms conventional data processing models into decentralized operations. Instead of sending data to remote servers, it processes information locally. This approach improves response times and reduces latency, which is vital for time-sensitive applications. Local processing also enhances data privacy, as sensitive information doesn’t need to leave the device.

Devices Empowered by Independence

Edge AI empowers devices like computers, IoT systems, and edge servers to operate autonomously. These devices don’t need an uninterrupted internet connection. This independence is crucial in areas with limited connectivity or for tasks requiring uninterrupted functionality. The result is smarter, more resilient systems capable of decision-making at the edge.

Practical Application in Everyday Life

Virtual assistants like Google Assistant, Apple’s Siri, and Amazon Alexa exemplify edge AI’s capabilities. These tools utilize machine learning to analyze user commands in real-time. They begin processing as soon as a user says, “Hey,” capturing data locally while interacting with cloud-based APIs. AI in edge computing enables these assistants to learn and store knowledge directly on the device, ensuring faster, context-aware responses.

Enhanced User Experience

With AI in edge computing, devices deliver seamless and personalized interactions. By learning locally, systems can adapt to user preferences while maintaining high performance. This ensures users experience faster, contextually aware services, even in offline scenarios.

What Might Edge AI Look Like in the Future?

Edge AI is poised to redefine how intelligent systems interact with the world. Beyond current applications like smartphones and wearables, its future will likely include advancements in more complex, real-time systems. Emerging examples span autonomous vehicles, drones, robotics, and video-analytics-enabled surveillance cameras. These technologies leverage data at the edge, enabling instant decision-making that aligns with real-world dynamics.

Revolutionizing Transportation

Self-driving vehicles are a glimpse into the transformative power of AI in edge computing. These cars process visual and sensor data in real time. They assess road conditions, nearby vehicles, and pedestrians while adapting to sudden changes like inclement weather. By integrating edge AI, autonomous cars deliver rapid, accurate decisions without relying solely on cloud computing. This ensures safety and efficiency in high-stakes environments.

Elevating Automation and Surveillance

Drones and robots equipped with edge AI are reshaping automation. Drones utilize edge AI to navigate complex environments autonomously, even in areas without connectivity. Similarly, robots apply localized intelligence to execute intricate tasks in industries like manufacturing and logistics. Surveillance cameras with edge AI analyze video feeds instantly, identifying threats or anomalies with minimal latency. This boosts operational security and situational awareness.

Unprecedented Growth Trajectory

The AI in edge computing ecosystem is set for exponential growth in the coming years. Market projections estimate the global edge computing market will reach $61.14 billion by 2028. This surge reflects industries’ increasing reliance on intelligent systems that operate independently of centralized infrastructures.

Empowering Smarter Ecosystems

Edge AI will enhance its role in creating interconnected systems that adapt dynamically. It will empower devices to process and act on complex data. This evolution will foster breakthroughs across sectors like healthcare, automotive, security, and energy.

The future of edge AI promises unmatched efficiency, scalability, and innovation. As its adoption accelerates, edge AI will continue to drive technological advancements, creating smarter, more resilient systems for diverse industries.

Understanding the Advantages and Disadvantages of Edge AI

Edge computing and Edge AI are shaping the future of data flow management. With the exponential rise in data from business operations, innovative approaches to handle this surge have become essential.

Edge computing addresses this challenge by processing and storing data near end users. This localized approach alleviates pressure on centralized servers, reducing the volume of data routed to the cloud. The integration of AI with Edge computing has introduced Edge AI, a transformative solution that maximizes the benefits of reduced latency, bandwidth efficiency, and offline functionality.

However, like any emerging technology, Edge AI has both advantages and limitations. Businesses must weigh these factors to determine its suitability for their operations.

Key Advantages of Edge AI

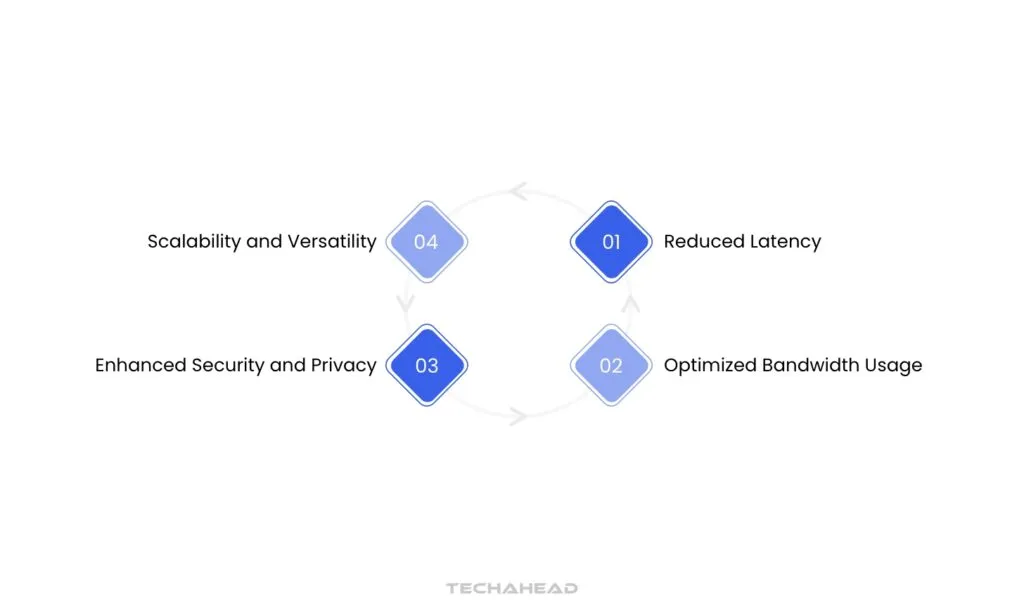

Reduced Latency

Edge AI significantly reduces latency by processing data locally instead of relying on distant cloud platforms. This enables quicker decision-making, as data doesn’t need to travel back and forth between the cloud and devices. Additionally, cloud platforms remain free for more complex analytics and computational tasks, ensuring better resource allocation.

Optimized Bandwidth Usage

Edge AI minimizes bandwidth consumption by processing, analyzing, and storing most data locally on Edge-enabled devices. This localized approach reduces the volume of data sent to the cloud, cutting operational costs while improving overall system efficiency.
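As a rough illustration of the bandwidth argument, the sketch below compares the size of a raw sensor window with a locally computed summary. The readings are synthetic and the choice of summary fields is an assumption. A real system would pick whatever aggregates its analytics actually need.

```python
import json
import statistics


def summarize_window(readings):
    """Collapse a window of raw readings into a compact summary."""
    return {
        "count": len(readings),
        "mean": round(statistics.fmean(readings), 2),
        "min": min(readings),
        "max": max(readings),
    }


# Hypothetical one-minute window of per-second vibration readings.
raw_window = [round(0.5 + 0.01 * (i % 7), 2) for i in range(60)]

raw_payload = json.dumps(raw_window).encode("utf-8")
summary_payload = json.dumps(summarize_window(raw_window)).encode("utf-8")

savings = 1 - len(summary_payload) / len(raw_payload)
print(f"raw: {len(raw_payload)} B, summary: {len(summary_payload)} B, saved: {savings:.0%}")
```

The trade-off is that anything not captured in the summary is gone once the raw window is discarded, which is the data-loss risk discussed later in this article.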

Enhanced Security and Privacy

By decentralizing data storage, Edge AI reduces reliance on centralized repositories, lowering the risk of large-scale breaches. Localized processing ensures sensitive information stays within the edge network. When cloud integration is required, redundant or unnecessary data is filtered out, ensuring only critical information is transmitted.

Scalability and Versatility

The proliferation of Edge-enabled devices simplifies system scalability. Many Original Equipment Manufacturers (OEMs) now embed native Edge capabilities into their products. This trend facilitates seamless expansion while allowing local networks to operate independently during disruptions in upstream or downstream systems.

Potential Challenges of Edge AI

Risk of Data Loss

Poorly designed Edge AI systems may inadvertently discard valuable information, leading to flawed analyses. Effective planning and programming are critical to ensuring only irrelevant data is filtered out while preserving essential insights for future use.

Localized Security Vulnerabilities

While Edge AI enhances cloud-level security, it introduces risks at the local network level. Weak access controls, poor password management, and human errors can create entry points for cyber threats. Implementing robust security protocols at every level of the system is essential to mitigating such vulnerabilities.

Limited Computing Power

Edge AI lacks the computational capabilities of cloud platforms, making it suitable only for specific AI tasks. For example, Edge devices are effective for on-device inference and lightweight learning tasks. However, large-scale model training and complex computations still rely on the superior processing power of cloud-based AI systems.

Device Variability and Reliability Issues

Edge AI systems often depend on a diverse range of devices, each with varying capabilities and reliability. This variability increases the risk of hardware failures or performance inconsistencies. Comprehensive testing and compatibility assessments are essential to mitigate these challenges and ensure system reliability.

Edge AI Use Cases and Industry Examples

AI in edge computing is transforming industries with innovative applications that bridge cloud computing and real-time local operations. Here are key use cases and practical implementations of edge AI.

Enhanced Speech Recognition

Edge AI enables mobile devices to transcribe speech instantly without relying on constant cloud connectivity. This ensures faster, more private communication while enhancing user experience through seamless functionality.

Biometric Security Solutions

Edge AI powers fingerprint detection and face-ID systems, ensuring secure authentication directly on devices. This eliminates latency concerns, enhancing both security and efficiency in personal and enterprise applications.

Revolutionizing Autonomous Vehicles

Autonomous navigation systems utilize edge AI for real-time decision-making. AI models are trained in the cloud, but vehicles execute these models locally for tasks like steering and braking. Self-driving systems improve continuously as data from unexpected human interventions is uploaded to refine cloud-based algorithms. Updated models are then deployed to all vehicles in the fleet, ensuring collective learning.
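The fleet-wide learning cycle can be outlined as follows. This is a schematic sketch with hypothetical class names (`Vehicle`, `FleetCloud`). The retraining step is a placeholder that only bumps a version number, since the actual training pipeline is outside the scope of this article.

```python
from dataclasses import dataclass, field


@dataclass
class Vehicle:
    vehicle_id: str
    model_version: int = 1
    interventions: list = field(default_factory=list)

    def record_intervention(self, description: str) -> None:
        """Store a case where the human driver overrode the model."""
        self.interventions.append(description)


class FleetCloud:
    """Collects interventions and rolls out a new model version."""

    def __init__(self):
        self.model_version = 1
        self.training_cases = []

    def collect(self, fleet):
        for vehicle in fleet:
            self.training_cases.extend(vehicle.interventions)
            vehicle.interventions.clear()

    def retrain_and_deploy(self, fleet) -> bool:
        if not self.training_cases:
            return False  # nothing new to learn from
        # Placeholder for real training: only the version number changes here.
        self.model_version += 1
        self.training_cases.clear()
        for vehicle in fleet:
            vehicle.model_version = self.model_version
        return True


fleet = [Vehicle("car-a"), Vehicle("car-b")]
fleet[0].record_intervention("driver braked for debris")

cloud = FleetCloud()
cloud.collect(fleet)
deployed = cloud.retrain_and_deploy(fleet)
print(deployed, [v.model_version for v in fleet])
```

Note that `car-b` receives the updated model even though only `car-a` reported an intervention, which is the collective learning the paragraph describes.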

Intelligent Image Processing

Google’s AI leverages edge computing to automatically generate realistic backgrounds in photos. By processing images locally, the system achieves faster results while maintaining the quality of edits, enabling a seamless creative experience for users.

Advanced Wearable Health Monitoring

Wearables use edge AI to analyze heart rate, blood pressure, glucose levels, and breathing locally. Cloud-trained AI models deployed on these devices provide real-time health insights, promoting proactive healthcare without requiring continuous cloud interactions.
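A very simple form of on-device analysis is to compare each reading against a rolling baseline kept in local memory. The sketch below assumes a heart-rate stream. The window size and deviation limit are illustrative values, not clinical thresholds, and a real wearable would run a trained model rather than this fixed rule.

```python
from collections import deque
from statistics import fmean


class HeartRateMonitor:
    """Flags readings that deviate sharply from a rolling local baseline."""

    def __init__(self, window_size=5, max_deviation_bpm=25.0):
        # Both defaults are illustrative, not clinical thresholds.
        self.window = deque(maxlen=window_size)
        self.max_deviation_bpm = max_deviation_bpm

    def update(self, bpm: float) -> bool:
        """Return True when the reading should raise an on-device alert."""
        is_anomaly = (
            len(self.window) == self.window.maxlen
            and abs(bpm - fmean(self.window)) > self.max_deviation_bpm
        )
        if not is_anomaly:
            # Only normal readings update the baseline.
            self.window.append(bpm)
        return is_anomaly


monitor = HeartRateMonitor()
stream = [72, 74, 71, 73, 75, 120, 74]
alerts = [bpm for bpm in stream if monitor.update(bpm)]
print(alerts)  # [120]
```

Nothing in this loop needs a network connection, so the alert fires even when the wearable is offline.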

Smarter Robotics

Robotic systems employ edge AI to enhance operational efficiency. For instance, a robot arm learns optimized ways to handle packages. It shares its findings with the cloud, enabling updates that improve the performance of other robots in the network. This approach accelerates innovation across robotics systems.

Adaptive Traffic Management

Edge AI drives smart traffic cameras that adjust light timings based on real-time traffic conditions. This reduces congestion, improves flow, and enhances urban mobility by processing data locally for instant action.
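One simple way such a controller could turn camera counts into signal timings is to split each cycle in proportion to the queue on every approach. The function below is a hypothetical sketch of that idea. The cycle length, minimum green time, and vehicle counts are assumed values, and real traffic controllers use considerably more elaborate logic.

```python
def allocate_green_times(queue_lengths, cycle_seconds=120, min_green=10):
    """Split one signal cycle across approaches in proportion to demand."""
    approaches = list(queue_lengths)
    flexible = cycle_seconds - min_green * len(approaches)
    if flexible < 0:
        raise ValueError("cycle too short for the minimum green times")
    total = sum(queue_lengths.values())
    plan = {}
    for name in approaches:
        # With no vehicles anywhere, fall back to an even split.
        share = queue_lengths[name] / total if total else 1 / len(approaches)
        plan[name] = min_green + flexible * share
    return plan


# Hypothetical vehicle counts detected by the camera on each approach.
counts = {"north": 12, "south": 4, "east": 2, "west": 2}
plan = allocate_green_times(counts)
for approach, seconds in plan.items():
    print(f"{approach}: {seconds:.0f} s")
```

Every approach keeps at least the minimum green time, so a quiet road is never starved while the busy one gets the larger share.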

Difference Between Edge AI and Cloud AI

The evolution of edge AI and cloud AI stems from shifts in technology and development practices over time. Before the emergence of the cloud or edge, computing revolved around mainframes, desktops, smartphones, and embedded systems. Application development was slower, adhering to Waterfall methodologies that required bundling extensive functionality into annual updates.

The advent of cloud computing revolutionized workflows by automating data center processes. Agile practices replaced rigid Waterfall models, enabling faster iterations. Modern cloud-based applications now undergo multiple updates daily. This modular approach enhances flexibility and efficiency. Edge AI builds on this innovation, extending these Agile workflows to edge devices like smartphones, smart appliances, and factory equipment.

Modular Development Beyond the Cloud

While cloud AI centralizes functionality, edge AI brings intelligence to the periphery of networks. It allows mobile phones, vehicles, and IoT devices to process and act on data locally. This decentralization drives faster decision-making and enhanced real-time responsiveness.

Degrees of Implementation

The integration of edge AI varies by device. Basic edge devices, like smart speakers, send data to the cloud for inference. More advanced setups, such as 5G access servers, host AI capabilities that serve multiple nearby devices. LF Edge, an initiative by the Linux Foundation, categorizes edge devices into types like lightbulbs, on-premises servers, and regional data centers. These represent the growing versatility of edge AI across industries.

Collaborative Edge-Cloud Ecosystem

Edge AI and cloud AI complement each other seamlessly. In some cases, edge devices transmit raw data to the cloud, where inferencing is performed, and results are sent back. Alternatively, edge devices can run inference locally using models trained in the cloud. Advanced implementations even allow edge devices to assist in training AI models, creating a dynamic feedback loop that enhances overall AI accuracy and functionality.
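The three collaboration patterns in this paragraph can be expressed as a small decision function. The names `InferenceMode` and `choose_mode`, and the three capability flags, are illustrative assumptions about how a device might describe itself.

```python
from enum import Enum


class InferenceMode(Enum):
    CLOUD = "send raw data to the cloud for inference"
    LOCAL = "run a cloud-trained model on the device"
    LOCAL_WITH_TRAINING = "run locally and contribute to training"


def choose_mode(has_local_model: bool, can_train: bool, online: bool) -> InferenceMode:
    """Pick one of the three edge-cloud collaboration patterns."""
    if has_local_model and can_train:
        return InferenceMode.LOCAL_WITH_TRAINING
    if has_local_model:
        return InferenceMode.LOCAL
    if online:
        return InferenceMode.CLOUD
    raise RuntimeError("no local model and no connectivity: cannot infer")


# A basic smart speaker: no on-device model, but connected.
print(choose_mode(has_local_model=False, can_train=False, online=True).name)
# A device running a cloud-trained model, currently offline.
print(choose_mode(has_local_model=True, can_train=False, online=False).name)
```

The final branch makes the dependency explicit: a device that relies on cloud inference has no fallback when connectivity drops, whereas one with a local model keeps working.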

Enhancing AI Across Scales

By integrating edge AI, organizations capitalize on local processing power while leveraging cloud scalability. This symbiosis ensures optimal performance for applications requiring both immediate insights and large-scale analytics.

Conclusion

Edge AI stands as a transformative force, bridging the gap between centralized cloud intelligence and real-time edge processing. Its ability to decentralize AI workflows has unlocked unprecedented opportunities across industries, from healthcare and transportation to security and automation. By reducing latency, enhancing data privacy, and empowering devices with autonomy, Edge AI is revolutionizing how businesses harness intelligence at scale.

However, successful implementation requires balancing its advantages with potential challenges. Businesses must adopt scalable strategies, robust security measures, and effective device management to fully realize its potential.

As Edge AI continues to evolve, it promises to redefine industries, driving smarter ecosystems and accelerating digital transformation. Organizations that invest in this technology today will be better positioned to lead in an era where real-time insights and autonomous systems dictate the pace of innovation.

Whether it’s powering autonomous vehicles, optimizing operations, or enhancing user experiences, Edge AI is not just a technological shift; it’s a paradigm change shaping the future of intelligent systems. Embrace Edge AI today to stay ahead in the dynamic landscape of innovation.

Source URL: https://www.techaheadcorp.com/blog/revolutionizing-industries-with-edge-ai/
