Data Lake Architecture: Unlocking the Power of Big Data

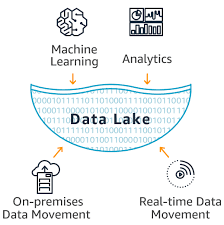

Introduction to Data Lake Architecture

In the realm of big data management, businesses are increasingly turning to data lake architecture as a comprehensive solution for storing, managing, and analyzing vast amounts of diverse data. But what exactly is a data lake? Simply put, a data lake is a centralized repository that allows organizations to store structured, semi-structured, and unstructured data at scale, without the need for pre-defined schemas. This flexibility makes data lakes ideal for handling the volume, variety, and velocity of modern data sources.

Components of Data Lake Architecture

Data Sources

The foundation of any data lake architecture lies in its data sources. These can include everything from transactional databases and log files to social media feeds and sensor data. By ingesting data from a wide range of sources, organizations can gain a holistic view of their operations and customer interactions.

Data Ingestion

Once data sources have been identified, the next step is ingesting that data into the data lake. This process involves extracting data from its source systems and loading it into the data lake, usually in its raw form; transformation into analysis-ready formats typically happens later, inside the lake. Automated ingestion pipelines streamline this process, keeping data continuously updated and available for analysis in near real time.
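As a rough illustration of the extract and load steps, the sketch below copies a source file unchanged into a date-partitioned raw zone. The directory layout, the `ingest_file` helper, and the sample data are assumptions made for illustration, not a standard; any transformation is deliberately left to a later stage.

```python
import shutil
import tempfile
from datetime import date
from pathlib import Path


def ingest_file(source: Path, lake_root: Path, dataset: str, day: date) -> Path:
    """Copy a source file, unchanged, into a date-partitioned raw zone."""
    # Hypothetical layout: <lake_root>/raw/<dataset>/ingest_date=YYYY-MM-DD/<filename>
    target_dir = lake_root / "raw" / dataset / f"ingest_date={day.isoformat()}"
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / source.name
    shutil.copy2(source, target)  # plain file copy; the content is not transformed
    return target


# Temporary directories stand in for a source system and the lake itself.
workdir = Path(tempfile.mkdtemp())
source_file = workdir / "orders.csv"
source_file.write_text("order_id,amount\n1,19.99\n2,5.00\n")

landed = ingest_file(source_file, workdir / "lake", "orders", date(2024, 1, 15))
print(landed.relative_to(workdir))
```

Partitioning by ingestion date is one common choice because it lets later jobs pick up only the new data; an automated pipeline would run a step like this on a schedule or in response to events.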

Storage Layer

The storage layer of a data lake architecture is where the ingested data is stored. Unlike traditional data warehouses, which require data to be structured before storage, data lakes store raw data in its original format. This raw data is then organized using metadata tags, making it easily discoverable and accessible to users.
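Storing raw data means that structure is applied when the data is read rather than when it is written. The sketch below shows this idea with a few made-up JSON events; the field names and the `read_with_schema` helper are hypothetical and only meant to show two consumers reading the same raw records in different ways.

```python
import json

# Raw events exactly as they might arrive: fields vary from record to record.
raw_lines = [
    '{"user": "a1", "event": "click", "ts": "2024-01-15T10:00:00"}',
    '{"user": "b2", "event": "purchase", "amount": "19.99"}',
    '{"user": "c3", "event": "click", "device": {"os": "android"}}',
]


def read_with_schema(lines, schema):
    """Apply a schema at read time: pick the requested fields and cast them."""
    for line in lines:
        record = json.loads(line)
        row = {}
        for field_name, cast in schema.items():
            value = record.get(field_name)
            # Missing fields become None instead of failing the whole read.
            row[field_name] = cast(value) if value is not None else None
        yield row


# Two consumers read the same raw data with different schemas.
click_schema = {"user": str, "event": str}
revenue_schema = {"user": str, "amount": float}

clicks = [r for r in read_with_schema(raw_lines, click_schema) if r["event"] == "click"]
revenue = sum(r["amount"] or 0.0 for r in read_with_schema(raw_lines, revenue_schema))
```

Because nothing was discarded at write time, a new consumer can later read fields such as `device` without re-ingesting the data.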

Data Processing

Data processing is the heart of any data lake architecture, enabling organizations to extract insights and derive value from their data. Technologies such as Apache Hadoop and Apache Spark provide scalable frameworks for processing large volumes of data in parallel, while tools like Apache Hive and Apache Pig facilitate querying and analysis.
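The pattern these frameworks share is to split a dataset into partitions, process each partition independently, and then merge the partial results. The sketch below imitates that pattern on a single machine with Python's standard library; it is not Spark or Hadoop code, and the sample log lines are invented for illustration.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

# Hypothetical partitions of a log dataset; in a real lake these would be files.
partitions = [
    ["GET /home", "GET /cart", "POST /order"],
    ["GET /home", "GET /home", "POST /order"],
    ["GET /cart", "GET /home"],
]


def count_partition(lines):
    """Map step: count requests per path within one partition."""
    return Counter(line.split()[1] for line in lines)


def merge_counts(partials):
    """Reduce step: combine the per-partition counts into one result."""
    total = Counter()
    for partial in partials:
        total.update(partial)
    return total


# Each partition is handled by its own worker, independently of the others.
with ThreadPoolExecutor(max_workers=3) as pool:
    partial_counts = list(pool.map(count_partition, partitions))

path_counts = merge_counts(partial_counts)
print(path_counts.most_common())
```

A thread pool is used here only to keep the example self-contained; a distributed engine applies the same map-and-merge idea across many machines.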

Data Access

The final component of data lake architecture is data access. This encompasses the tools and interfaces that enable users to interact with the data lake, from business intelligence dashboards and data visualization tools to custom analytics applications. By providing self-service access to data, organizations empower users to explore data and derive insights independently.

Design Considerations for Data Lake Architecture

Building an effective data lake architecture requires careful consideration of several key factors:

Scalability

As data volumes grow, the data lake must be able to handle larger workloads without performance degradation. In practice this usually means that storage and compute can each be scaled out independently.

Flexibility

Data lakes should be designed to accommodate a wide range of data types and formats, allowing organizations to adapt to evolving business requirements and data sources.

Security

Protecting sensitive data is paramount in any data lake architecture. Robust security measures, including encryption, access controls, and auditing, help mitigate the risk of data breaches and unauthorized access.
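To make the access control and auditing ideas concrete, here is a minimal sketch of a role-based read check that records every attempt. The zone names, roles, and the `can_read` function are hypothetical; a real deployment would rely on the access and audit features of its storage platform, and encryption is not shown.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical policy: which roles may read which lake zones.
READ_POLICY = {
    "raw": {"data_engineer"},
    "curated": {"data_engineer", "analyst"},
}


@dataclass
class AuditLog:
    entries: list = field(default_factory=list)

    def record(self, user, zone, allowed):
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "zone": zone,
            "allowed": allowed,
        })


def can_read(user, role, zone, audit):
    """Check the role against the policy and audit every attempt."""
    allowed = role in READ_POLICY.get(zone, set())  # unknown zones are denied
    audit.record(user, zone, allowed)
    return allowed


audit = AuditLog()
analyst_raw = can_read("dana", "analyst", "raw", audit)
analyst_curated = can_read("dana", "analyst", "curated", audit)
```

Denied attempts are logged as well as granted ones, since failed access is often what an audit needs to surface.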

Metadata Management

Effective metadata management is critical for ensuring that data remains discoverable and usable within the data lake. Metadata tags should accurately describe the contents, context, and lineage of each data asset.
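One way to picture this is a small catalog in which every asset carries a description, tags, and links to the assets it was derived from. The `DataAsset` and `Catalog` classes below are a hypothetical sketch under those assumptions, not the API of any particular catalog product.

```python
from dataclasses import dataclass, field


@dataclass
class DataAsset:
    name: str
    description: str
    tags: set = field(default_factory=set)
    derived_from: list = field(default_factory=list)  # names of upstream assets


class Catalog:
    def __init__(self):
        self.assets = {}

    def register(self, asset):
        self.assets[asset.name] = asset

    def find_by_tag(self, tag):
        """Names of all assets carrying the tag, in alphabetical order."""
        return sorted(a.name for a in self.assets.values() if tag in a.tags)

    def lineage(self, name):
        """All upstream assets, nearest first, following derived_from links."""
        seen, queue = [], list(self.assets[name].derived_from)
        while queue:
            parent = queue.pop(0)
            if parent not in seen:
                seen.append(parent)
                if parent in self.assets:
                    queue.extend(self.assets[parent].derived_from)
        return seen


catalog = Catalog()
catalog.register(DataAsset("raw_orders", "Orders as exported from the shop", {"sales", "raw"}))
catalog.register(DataAsset("clean_orders", "Deduplicated orders", {"sales"}, ["raw_orders"]))
catalog.register(DataAsset("daily_revenue", "Revenue per day", {"sales", "finance"}, ["clean_orders"]))

sales_assets = catalog.find_by_tag("sales")
revenue_lineage = catalog.lineage("daily_revenue")
```

Tags answer the question "what data do we have about sales?", while the lineage links answer "where did this number come from?".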

Benefits of Implementing Data Lake Architecture

The adoption of data lake architecture offers several compelling benefits for organizations:

Centralized Data Repository

By consolidating data from disparate sources into a single repository, data lakes provide a unified view of organizational data, enabling more comprehensive analysis and decision-making.

Cost-Effectiveness

Traditional data warehouses typically require upfront investment in infrastructure and data modeling. Data lakes are usually built on low-cost commodity or cloud object storage and defer modeling until the data is read, which makes them a more cost-effective way to store and analyze large volumes of data.

Agility and Innovation

The flexibility and scalability of data lake architecture enable organizations to rapidly prototype and deploy new data-driven applications and services, driving innovation and competitive advantage.

Challenges in Data Lake Architecture

Despite its many benefits, implementing and managing a data lake architecture presents several challenges:

Data Governance

Maintaining data quality, consistency, and compliance across the data lake can be challenging, particularly in organizations with diverse data sources and stakeholders.

Data Quality

Ensuring the accuracy, completeness, and reliability of data within the data lake is essential for deriving meaningful insights and making informed decisions.
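Checks for completeness, validity, and duplicates can start out very simple. The sketch below runs three such checks over a handful of invented order records; the column names and the rules (for example, that an amount must not be negative) are assumptions chosen for illustration.

```python
records = [
    {"order_id": 1, "amount": 19.99, "country": "DE"},
    {"order_id": 2, "amount": None, "country": "US"},
    {"order_id": 3, "amount": -5.00, "country": "FR"},
    {"order_id": 3, "amount": 12.50, "country": None},
]


def completeness(rows, column):
    """Share of rows where the column is present and not None."""
    filled = sum(1 for r in rows if r.get(column) is not None)
    return filled / len(rows)


def invalid_amounts(rows):
    """Order IDs whose amount is present but negative."""
    return [r["order_id"] for r in rows if r["amount"] is not None and r["amount"] < 0]


def duplicate_ids(rows):
    """Order IDs that appear more than once."""
    seen, dupes = set(), set()
    for r in rows:
        if r["order_id"] in seen:
            dupes.add(r["order_id"])
        else:
            seen.add(r["order_id"])
    return sorted(dupes)


report = {
    "amount_completeness": completeness(records, "amount"),
    "country_completeness": completeness(records, "country"),
    "invalid_amounts": invalid_amounts(records),
    "duplicate_ids": duplicate_ids(records),
}
print(report)
```

Running checks like these when data is ingested, and tracking the results over time, makes it easier to spot quality problems before they reach downstream reports.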

Complexity

The complexity of data lake architecture, including the integration of disparate technologies and data management processes, can pose significant challenges for organizations with limited expertise or resources.

Best Practices for Building and Managing Data Lake Architecture

To overcome these challenges and maximize the value of their data lake investments, organizations should follow these best practices:

Establish Clear Objectives

Define clear business objectives and use cases for the data lake to guide its design, implementation, and ongoing management.

Choose the Right Technologies

Select technologies and tools that align with the organization's requirements, considering factors such as scalability, interoperability, and ease of use.

Implement Data Governance Policies

Develop and enforce data governance policies to ensure data quality, security, and compliance throughout the data lifecycle.

Monitor and Optimize Performance

Regularly monitor the data lake's performance, for example query latency, ingestion lag, and storage growth, and tune it wherever bottlenecks appear so that it continues to scale.

Real-World Examples of Successful Data Lake Implementations

Numerous organizations across industries have successfully implemented data lake architecture to drive innovation, improve decision-making, and achieve business objectives. Some notable examples include:

• Netflix: Utilizes a data lake architecture to analyze user behavior, personalize content recommendations, and optimize streaming performance.

• GE Healthcare: Leverages a data lake to aggregate and analyze healthcare data from various sources, enabling more personalized patient care and predictive analytics.

• Uber: Uses a data lake to store and analyze vast amounts of ride and driver data, improving operational efficiency and customer experience.

Conclusion

Data lake architecture represents a powerful and flexible approach to managing big data, enabling organizations to unlock the full potential of their data assets. By integrating data from diverse sources, processing it at scale, and providing self-service access to users, data lakes empower organizations to derive actionable insights and drive innovation. However, building and managing a successful data lake architecture requires careful planning, investment, and ongoing governance. By following best practices and learning from real-world examples, organizations can harness the power of data lake architecture to stay competitive in today's data-driven economy.

FAQs

1. What is the difference between a data lake and a data warehouse?

• While both data lakes and data warehouses are used for storing and analyzing data, they differ in their approach to data storage and processing. Data warehouses require data to be structured according to a predefined schema before storage, making them well suited to structured data and established reporting needs. In contrast, data lakes store raw data in its original format and apply structure when the data is read, making them more flexible and scalable for handling diverse data types and sources.

2. How can organizations ensure data quality in a data lake?

• Ensuring data quality in a data lake requires implementing robust data governance policies and procedures. This includes establishing data quality standards, conducting data profiling and cleansing activities, and implementing data validation and monitoring processes.

3. What are some common use cases for data lake architecture?

• Common use cases for data lake architecture include big data analytics, machine learning and AI, IoT data processing, real-time analytics, and data warehouse modernization.

4. What are the potential security risks associated with data lake architecture?

• Some potential security risks associated with data lake architecture include unauthorized access to sensitive data, data breaches, data loss or corruption, and regulatory compliance violations. Implementing robust security measures, such as encryption, access controls, and monitoring, can help mitigate these risks.

5. How can organizations measure the ROI of a data lake implementation?

• Measuring the ROI of a data lake implementation requires defining clear metrics and KPIs aligned with business objectives, such as improved decision-making, cost savings, revenue generation, and operational efficiency. Organizations can then track and analyze these metrics over time to assess the impact of the data lake on their bottom line.
