Securing the Future: Protecting AI Chips Against Vulnerabilities in Autonomous Systems

Written by Nimit » Updated on: February 13th, 2025

Introduction

The Artificial Intelligence (AI) chip sector has undergone rapid advancements in recent years, driven by the growing need for faster, more efficient hardware solutions that can handle the complex demands of AI algorithms. AI chips are designed to accelerate AI workloads, such as machine learning, deep learning, and neural networks, enabling real-time processing and decision-making across industries. From general-purpose processors to specialized chips, the evolution of AI hardware has led to innovations that enhance performance, power efficiency, and scalability.

As the AI landscape continues to evolve, so too must the security of the hardware that powers AI systems. One of the key concerns in the development of AI chips is the protection of these systems against potential vulnerabilities, especially in autonomous systems. These vulnerabilities can be exploited by malicious actors, compromising the integrity, safety, and privacy of AI-driven applications. In this article, we will explore the evolution of cutting-edge technologies in the AI chip sector, with a focus on AI chip security and the protection of autonomous systems from vulnerabilities.

The Growth of AI Chips

AI chips have come a long way from their early days. Initially, AI computations were carried out using general-purpose processors, such as Central Processing Units (CPUs) and Graphics Processing Units (GPUs). While CPUs were versatile, they were not optimized for the parallel processing required for AI tasks. GPUs, on the other hand, excelled at parallel computation, making them ideal for deep learning and other AI workloads.

However, as AI applications grew more complex and required real-time processing of massive datasets, the need for more specialized hardware became apparent. This led to the development of AI accelerators, custom chips, and specialized hardware designed specifically to handle AI workloads. These chips include Field-Programmable Gate Arrays (FPGAs), Application-Specific Integrated Circuits (ASICs), and Neural Network Processors (NNPs), each offering unique benefits for different AI tasks.

While these specialized chips have brought significant improvements in speed and efficiency, they have also introduced new challenges, particularly around security. The increasing reliance on AI systems in critical industries such as healthcare, autonomous vehicles, and finance makes it essential to ensure the security of the AI chips that power these systems.

AI Chip Security: A Growing Concern

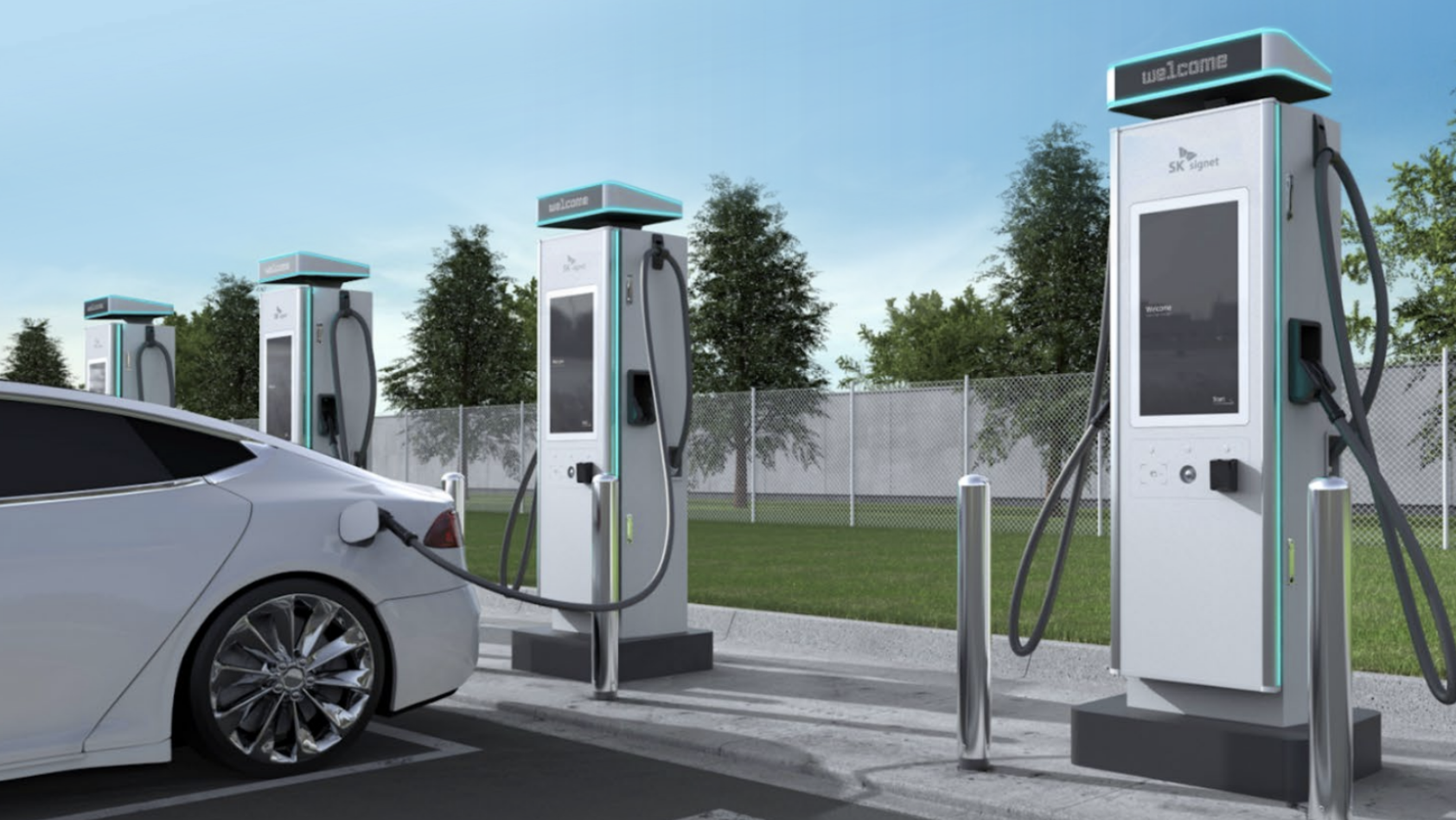

The security of AI chips is a critical issue, especially as autonomous systems become more widespread. Autonomous systems, such as self-driving cars, drones, and industrial robots, rely heavily on AI chips to process vast amounts of data in real-time. These systems are designed to make decisions based on the data they receive, and any compromise in their security could have disastrous consequences.

AI chips face several unique security challenges, including:

1. Vulnerabilities in Hardware

AI chips, like any hardware, are susceptible to vulnerabilities that can be exploited by attackers. These vulnerabilities may arise from design flaws, manufacturing defects, or weak security protocols. Malicious actors could exploit these vulnerabilities to gain unauthorized access to the chip, bypass security measures, or inject malicious code into the system.

In autonomous systems, this could lead to a range of issues, from data breaches and privacy violations to more serious consequences, such as the manipulation of decision-making processes or the hijacking of control systems. For example, a compromised AI chip in an autonomous vehicle could manipulate sensor data, leading the vehicle to make dangerous decisions.

2. Data Privacy and Integrity

AI chips process vast amounts of sensitive data, including personal information, financial transactions, and health data. Protecting this data from unauthorized access and tampering is critical for ensuring the privacy and integrity of AI systems. If an attacker can compromise the chip, they could access sensitive data, manipulate it, or even erase it.

In the context of autonomous systems, data privacy and integrity are especially important. Autonomous vehicles, for instance, rely on data from sensors and cameras to navigate and make decisions. If this data is tampered with or stolen, the vehicle could be forced to make incorrect decisions, potentially endangering lives.

3. Side-Channel Attacks

Side-channel attacks exploit unintended leaks of information from a system's physical implementation, such as power consumption, electromagnetic emissions, or timing data. These attacks can be used to extract sensitive information from an AI chip, such as encryption keys or details of the model it runs. In autonomous systems, a key recovered this way could let an attacker decrypt protected data or forge messages that other components trust, compromising the security of the whole system.
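To make the timing channel concrete, here is a minimal Python sketch. The function names are hypothetical and the example is not tied to any particular chip. It contrasts an early-exit comparison, whose run time depends on how many leading bytes of a guess are correct, with the standard library's `hmac.compare_digest`, which is designed so that timing does not reveal where the inputs differ.

```python
import hmac


def leaky_equals(secret: bytes, guess: bytes) -> bool:
    """Early-exit comparison: run time grows with the number of matching leading bytes."""
    if len(secret) != len(guess):
        return False
    for s, g in zip(secret, guess):
        if s != g:
            return False  # an earlier mismatch returns sooner, which leaks information
    return True


def comparisons_before_exit(secret: bytes, guess: bytes) -> int:
    """Count the byte comparisons leaky_equals would perform (a proxy for its timing)."""
    count = 0
    for s, g in zip(secret, guess):
        count += 1
        if s != g:
            break
    return count


def constant_time_equals(secret: bytes, guess: bytes) -> bool:
    """Comparison whose duration does not depend on the position of the first mismatch."""
    return hmac.compare_digest(secret, guess)
```

An attacker who can measure the leaky version can recover a secret one byte at a time: a guess with more correct leading bytes takes measurably longer to reject. Power and electromagnetic channels are exploited on the same principle, but countering them generally requires hardware measures rather than a software change.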

4. Software and Firmware Vulnerabilities

In addition to hardware vulnerabilities, AI chips are also susceptible to software and firmware vulnerabilities. Software running on AI chips, such as the operating system, drivers, and applications, can contain bugs or security flaws that attackers can exploit. For example, a vulnerability in the firmware of an AI chip could allow an attacker to execute malicious code, bypass security measures, or alter the chip’s behavior.

Given that AI systems often rely on software updates and patches to fix security issues, the integrity of the update process itself is crucial. If attackers can tamper with software or firmware updates, they can introduce malicious code into the system through the very channel meant to keep it secure.
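As a rough illustration of how a device might check an update before installing it, the sketch below authenticates a firmware image and refuses version rollbacks. It rests on several assumptions: the function names are hypothetical, and a shared-key HMAC from the Python standard library stands in for the asymmetric signatures (for example ECDSA or Ed25519) that production update systems typically use so that the device only needs to hold a public key.

```python
import hashlib
import hmac


def sign_update(image: bytes, version: int, key: bytes) -> bytes:
    """Vendor side: bind the version number to the image hash and authenticate both."""
    message = version.to_bytes(4, "big") + hashlib.sha256(image).digest()
    return hmac.new(key, message, hashlib.sha256).digest()


def accept_update(image: bytes, version: int, tag: bytes,
                  key: bytes, installed_version: int) -> bool:
    """Device side: install only authentic images that are newer than the current one."""
    if version <= installed_version:
        return False  # reject rollback to an older, possibly vulnerable release
    expected = sign_update(image, version, key)
    return hmac.compare_digest(expected, tag)  # constant-time tag comparison
```

Binding the version into the authenticated message matters: without it, an attacker could replay a genuine but outdated image that still contains a known flaw.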

Protecting AI Chips Against Vulnerabilities

As the security risks associated with AI chips continue to grow, various approaches are being explored to protect these systems from vulnerabilities. From hardware-based security measures to software-based solutions, the AI chip industry is focused on developing technologies to safeguard autonomous systems and ensure the integrity of AI applications.

1. Hardware Security Modules (HSMs)

Hardware Security Modules (HSMs) are specialized devices used to protect cryptographic keys and sensitive data in AI chips. HSMs are designed to prevent unauthorized access to key data, ensuring that encryption keys and other sensitive information are securely stored and processed within the chip. By integrating HSMs into AI chips, manufacturers can provide an additional layer of security against attacks that attempt to extract sensitive data.

In autonomous systems, HSMs can be used to protect communication between AI chips and other components, ensuring that data is transmitted securely and preventing attackers from tampering with the information. For example, in autonomous vehicles, HSMs can protect sensor data and ensure that control commands are not intercepted or altered by malicious actors.
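The idea of keeping a key inside a protected boundary while still using it to authenticate control commands can be sketched in software. The `SimulatedHsm` class below is purely hypothetical: a real HSM enforces the boundary in hardware, whereas Python attribute hiding offers no actual protection. The message counter is an added assumption that lets the receiver reject replayed commands.

```python
import hashlib
import hmac
import secrets
from typing import Optional


class SimulatedHsm:
    """Software stand-in for an HSM: callers can request tags but never read the key."""

    def __init__(self, key: Optional[bytes] = None):
        # In real hardware the key never leaves the module; here it is merely hidden.
        self.__key = key if key is not None else secrets.token_bytes(32)
        self._last_counter = -1

    def tag(self, counter: int, command: bytes) -> bytes:
        """Authenticate a command together with its message counter."""
        message = counter.to_bytes(8, "big") + command
        return hmac.new(self.__key, message, hashlib.sha256).digest()

    def verify(self, counter: int, command: bytes, tag: bytes) -> bool:
        """Accept a command only if its tag is valid and its counter is fresh."""
        if counter <= self._last_counter:
            return False  # replayed or out-of-order message
        if not hmac.compare_digest(self.tag(counter, command), tag):
            return False  # command or tag was altered in transit
        self._last_counter = counter
        return True
```

In this sketch the sender and receiver are provisioned with the same key, for instance at manufacture. The sender calls `tag` on each command, and the receiver calls `verify` before acting on it.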

2. Trusted Execution Environments (TEEs)

Trusted Execution Environments (TEEs) are isolated areas within a chip that are designed to run code in a secure environment, separate from the rest of the system. TEEs provide a safe space for sensitive data and operations, ensuring that they cannot be accessed or tampered with by unauthorized entities. By using TEEs, AI chips can isolate critical functions, such as encryption or authentication, from the rest of the system, making it more difficult for attackers to compromise the chip’s security.

In autonomous systems, TEEs can be used to protect the core decision-making algorithms and sensor data, preventing attackers from manipulating the system’s behavior. For example, in an autonomous vehicle, TEEs can safeguard the algorithms that process sensor data and make navigation decisions, ensuring that the vehicle operates safely even in the presence of external threats.

3. AI Chip Authentication and Secure Boot

AI chip authentication is another important security measure that ensures only authorized components can interact with the chip. Secure boot processes verify the integrity of the chip’s firmware and software during startup, so that only code that passes verification is allowed to run when the system boots. By incorporating secure boot and authentication mechanisms, AI chips can detect tampering with their code or firmware and refuse to execute it.

In autonomous systems, secure boot and authentication are critical for ensuring that the system operates only with trusted software and hardware. These measures help prevent unauthorized components from being added to the system, reducing the risk of attacks that could compromise the chip’s functionality.
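The staged verification behind secure boot can be sketched as follows. This is a simplified illustration under stated assumptions: the stage names and the `verify_boot_chain` helper are hypothetical, and each stage is compared against a trusted SHA-256 digest, whereas real implementations usually verify digital signatures anchored in immutable ROM or fuses.

```python
import hashlib
from typing import Dict, List, Optional, Tuple


def digest(blob: bytes) -> str:
    """SHA-256 digest of a boot stage image, as a hex string."""
    return hashlib.sha256(blob).hexdigest()


def verify_boot_chain(stages: List[Tuple[str, bytes]],
                      trusted_digests: Dict[str, str]) -> Optional[str]:
    """Check each stage in boot order; return the first stage that fails, else None."""
    for name, blob in stages:
        if trusted_digests.get(name) != digest(blob):
            return name  # halt here: this stage is unknown or has been modified
    return None


# Hypothetical boot order: each stage is measured before control is handed to it.
stages = [("bootloader", b"bl"), ("firmware", b"fw"), ("model", b"weights")]
trusted = {name: digest(blob) for name, blob in stages}
```

Stopping at the first failing stage is the important property: a modified firmware image is never given the chance to run and interfere with later checks.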

4. AI Chip Monitoring and Intrusion Detection

Continuous monitoring of AI chip behavior is essential for detecting and responding to security threats in real-time. Intrusion detection systems can be integrated into AI chips to monitor for signs of malicious activity, such as unusual power consumption or unauthorized data access. By continuously analyzing the chip’s behavior, these systems can quickly identify potential security breaches and take action to mitigate the risk.

In autonomous systems, real-time monitoring is essential for detecting attacks that could compromise the system’s safety. For example, in an autonomous vehicle, monitoring systems can detect if an attacker is attempting to manipulate sensor data or hijack the vehicle’s control systems, triggering an immediate response to protect the vehicle and its passengers.
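One simple way to flag "unusual power consumption" is to compare each new reading against a rolling baseline. The sketch below does this with a z-score. The `PowerMonitor` class, the window size, and the threshold are illustrative assumptions rather than values from any real product, and on-chip monitors would normally implement such checks in hardware or firmware.

```python
from collections import deque
from statistics import mean, pstdev


class PowerMonitor:
    """Flags power readings that deviate sharply from a rolling baseline."""

    def __init__(self, window: int = 20, threshold: float = 4.0):
        self.samples = deque(maxlen=window)
        self.threshold = threshold

    def observe(self, watts: float) -> bool:
        """Return True if the reading is anomalous relative to the recent window."""
        anomalous = False
        if len(self.samples) == self.samples.maxlen:
            mu = mean(self.samples)
            # Floor sigma so a perfectly flat baseline does not divide by zero.
            sigma = max(pstdev(self.samples), 1e-6)
            anomalous = abs(watts - mu) / sigma > self.threshold
        if not anomalous:
            self.samples.append(watts)  # only normal readings update the baseline
        return anomalous
```

Keeping anomalous readings out of the baseline is a deliberate choice here: otherwise a sustained attack would gradually come to look normal. The trade-off is that a legitimate change in workload needs some other mechanism to reset the baseline.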

5. AI-Specific Encryption Techniques

AI chips can also benefit from encryption techniques suited to AI workloads. Conventional encryption methods, such as AES or RSA, protect data at rest and in transit, but the data generally has to be decrypted before it can be processed, which leaves it exposed during computation. As a result, techniques that keep data protected while it is being processed are being developed, with the ongoing challenge of maintaining acceptable performance.

For example, homomorphic encryption allows computations to be performed on encrypted data, so that sensitive information remains protected throughout the processing cycle. This technique is particularly relevant for applications that involve sensitive data, such as healthcare and finance, where data privacy is a top concern, although it currently carries substantial computational overhead compared with processing plaintext.
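To show what "computing on encrypted data" means, here is a toy Python sketch of the Paillier cryptosystem. Paillier is additively homomorphic, meaning it supports addition on ciphertexts, which is a narrower capability than fully homomorphic encryption. The tiny primes and the function names are assumptions chosen for readability; the parameters are far too small to be secure.

```python
import math
import secrets


def keygen(p: int = 1009, q: int = 1013):
    """Toy key generation with the common choice g = n + 1. Returns (n, (lam, mu))."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # modular inverse; this form is valid because g = n + 1
    return n, (lam, mu)


def encrypt(n: int, m: int) -> int:
    """Encrypt an integer 0 <= m < n under public key n."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # random blinding factor in [1, n - 1]
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m, n2) * pow(r, n, n2)) % n2


def decrypt(n: int, lam: int, mu: int, c: int) -> int:
    """Recover the plaintext using the private values lam and mu."""
    x = pow(c, lam, n * n)
    return ((x - 1) // n * mu) % n


def add_encrypted(n: int, c1: int, c2: int) -> int:
    """Multiplying ciphertexts yields an encryption of the sum of the plaintexts."""
    return (c1 * c2) % (n * n)
```

A party holding only `n` can combine two ciphertexts with `add_encrypted` without ever seeing the underlying values; only the holder of `lam` and `mu` can decrypt the result. Sums wrap around modulo `n`, so the plaintexts must stay small enough for the total to remain below `n`.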

Conclusion

The evolution of AI chips has brought about significant improvements in performance, efficiency, and scalability, enabling AI systems to handle increasingly complex workloads. However, as AI technology continues to advance, the security of AI chips becomes a critical concern. Protecting AI chips from vulnerabilities, especially in autonomous systems, is essential to ensuring the integrity, safety, and privacy of AI-driven applications.

By leveraging hardware security modules, trusted execution environments, secure boot and authentication, continuous monitoring, and AI-specific encryption techniques, manufacturers can bolster the security of AI chips and protect them from potential threats. As AI chips continue to power innovations across industries, ensuring their security will be essential to the success of autonomous systems and the future of AI technology.
